Architectural software

EXTON, PA, May 15, 2008 - The Swedish inspection authority AF-Kontroll has approved the latest versions of Bentley AutoPIPE, the leading software for calculating piping code stresses, loads, and deflections under static and dynamic loading conditions, as compliant with European standard EN 13480. The approval allows users designing projects certified under the European Pressure Equipment Directive (PED) to benefit from the latest product enhancements to Bentley AutoPIPE. Other approvals and compliances Bentley AutoPIPE has received include ISO 9001; NQA-1 nuclear and 10CFR21; ASME N45.2; GOST approval for oil, gas, and power projects in Russia; and certification by Stoomwezen (Lloyd's Register Nederland BV).

Bentley AutoPIPE is a complete, integrated pipe stress design and analysis solution that improves productivity and interoperability with other computer-aided design (CAD) and analysis software, including STAAD.Pro. It analyzes systems of any complexity and provides special features for buried pipeline analysis, wave loading, water or steam hammer, FRP/GRP pipe, and integrated pipe/structure interaction.

About the Bentley CAE product family

The Bentley computer-aided engineering (CAE) product family, including Bentley AutoPIPE, Bentley PlantFLOW, Bentley PULS, and Bentley WinNOZL, is now part of Bentley's structural and piping solutions. These solutions include the RAM International software and STAAD.Pro product lines for engineering analysis and design in plant, building, and civil applications. For more information about Bentley's STAAD and RAM product lines, please visit www.bentley.com/structural.

The AF Group has 2,500 employees, of whom about 150 work for AF-Kontroll. AF-Kontroll provides inspection services and acts as an accredited body for certifying that projects meet the requirements of the European Pressure Equipment Directive (PED 97/23/EC).

About Bentley

Bentley Systems, Incorporated provides software for the lifecycle of the world's infrastructure. The company's comprehensive portfolio for the building, plant, civil, and geospatial verticals spans architecture, engineering, construction (AEC), and operations. With annual revenues now surpassing $400 million and more than 2,400 colleagues globally, Bentley is the leading provider of AEC software to the Engineering News-Record top design firms and to major owner-operators, and was ranked the world's No. 2 provider of GIS/geospatial software solutions in a Daratech research study.


For more information, visit www.bentley.com.

Wednesday, May 21, 2008

Wednesday, May 14, 2008


Services


Exterior rendering

A professional service for creating photorealistic images of real estate units, ideal for selling units while the property is still at the design stage or under construction.

Interior rendering

A service for creating photorealistic interior images of every kind: kitchens, living rooms, lounges, offices, nightclubs, basements, and sales centers. Materials and furnishings are rendered accurately.

Custom 3D modeling

By modeling or by extruding two-dimensional (2D) objects, we can give shape to objects of every kind: mechanical products, high-tech objects, everyday items, rings, perfumes, and so on.

360° virtual tour

Virtual 3D navigation is a system that simulates walking through an available unit of the property. It is an interactive product of great value when selling real estate units.

3D cross sections of apartments

Show your development in cross section! Choose the view you want rendered in cross section, or request a three-dimensional model that can rotate by itself before your customers' eyes.

Economical 2D floor plans

Floor plans are valued for how easily they communicate. Simple, colorful, and quick to produce, they offer a good product at an economical price and a dependable way to present your development.

3D animations and simulations

Three-dimensional animations have a very strong visual impact, especially in architecture. They are ideal for multimedia products, point-of-sale advertising, video, the Internet, and TV.

Environmental impact assessment

Discover in advance what your development will look like: the renderings show the effect of the surrounding environment on your project.

Volume renderings

Volume renderings are ideal for conveying the space and structure of a development. They are often used to imitate classic monochrome or colored plastic scale models, giving the project an intermediate level of detail.

Billboards

The ideal medium for presenting a project on site is undoubtedly the construction-site billboard, but we can also produce brochures, folders, leaflets, and print materials of all kinds.

Multimedia presentations

Multimedia presentations are ideal for conferences or simply as sales support in a showroom, from simple multimedia slide shows to imaginative animations. Media can include CDs, DVDs, card-shaped CDs, mini CDs, and video cassettes.

Presentation websites

Entrust the website for your development to our experts! It can be a springboard for promoting your property or product. Rendering-3d.com can offer a complete service: domain registration, site creation, publication, and search engine positioning.


Real estate illustrations for building sites in hyperrealistic resolution

Looking for an efficient way to display your existing product line or designs? Do you need a 3D animation to show how your product works or the stages of its implementation? Do you need a graphic update for your logo or business website? Looking for an illustration for a magazine, a brochure, or a book cover? Want to take your project from engineering drawings to a hyperrealistic render? If so, welcome to the Render Architect studio!

The Render Architect studio specializes in creating high-quality three-dimensional models, drawings, and animations for every need. We pay great attention to detail and always aim to exceed our customers' expectations. Contact us today to discuss what the Render Architect studio can do for you.

http://milano.kijiji.it/c-Servizi -Computer-drawings-architectural-for-the-yard-buildings-W0QQAdIdZ51478427




Tuesday, May 13, 2008


RENDER ARCHITETTO

The RENDER ARCHITETTO group has worked since the 1990s in the virtual representation of architecture and of landscape changes using three-dimensional computer techniques. Over time, our working method has always been guided by a precise aim: to offer a realistic and highly evocative product that is at the same time versatile and fast to produce, and therefore able to interact easily with the requirements of the whole design process.

1. Reduced production times. Meeting the client's requirements means being able to produce highly realistic, high-resolution, complex images within extremely tight production schedules. It also means being able to interact easily with the designers during the work without compromising the quality of the finished product. This can only be achieved with a production system based on strict work procedures, tested and standardized through years of experience.

2. Control over the representation of vegetation. We have developed systems that ensure consistently realistic results, ease of management, good rendering performance, and full integration into the project at different scales of detail, within the production schedule. Our aim is to represent vegetation with techniques that are "readable" yet highly realistic and painterly, overcoming the otherwise insurmountable limitations of traditional three-dimensional representation methods.

3. Control over surface rendering. By developing procedural programs made ad hoc for each project, we can offer an infinite variety of solutions for representing the project's materials. This way of working allows endless variations in the color and shape of materials, giving the designer a powerful tool for verifying the design.

4. Representation of context elements and setting of the project. The same care given to the project is also applied to the surrounding architectural context and setting. An architectural object cannot be represented in isolation from its surroundings. Our goal is not merely to show visible objects but to produce images that are carefully crafted as a whole, placing the project in a plausible real setting.

http://www.renderarchitetto.altervista.org/



Architectural rendering with Revit 2009

The headline feature of Revit Architecture 2009 is the replacement of the aging AccuRender engine with mental ray. This should noticeably improve the overall quality of your renderings compared with AccuRender. Remember, mental ray is the same rendering engine found in 3ds Max and across the Autodesk product portfolio, so you can expect high quality.


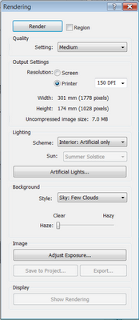

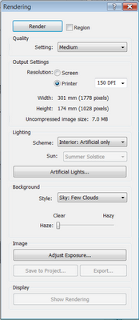

When you are in a 3D view or camera view, you will notice an additional tool in the view control bar. The new teapot icon gives you quick access to the Rendering dialog. Alternatively, you can still reach the rendering tools from the toolbar or the pull-down menu. The new layout of the Rendering dialog is excellent, easy to use and understand. Someone at Autodesk really listened to what architects and designers need when it comes to rendered images.


Revit Architecture 2009 also includes presets (Draft, Low, Medium, High, and Best) that let you get results quickly. However, you can also drill down and create your own custom settings for a particular view if you like. In general, increasing (or turning on) any of these settings improves image quality. Raising these values, or several settings at once, can increase render time exponentially, so you have been warned! On a positive note, if your workstation or laptop has a dual-core or quad-core processor, mental ray will use the additional cores. One thing I found was that there was no way to transfer custom settings between views, which is a shame. I'm sure someone will correct me if I'm wrong.

All light fixtures now use a photometric IES file to define their lighting parameters. If you open a suitable lighting fixture family, you will notice that you can specify the IES file for the fixture. Revit Architecture 2009 uses the information in the IES file to define the shape of the light source.

The materials supplied with Revit Architecture 2009 have had their material properties reassigned for mental ray. These materials are much more realistic and are stored as part of the project file. If you open the Materials dialog box (Settings pull-down menu > Materials), you will notice that it has been overhauled. The Appearance tab lets you control the rendering settings of the material you are defining. Revit includes a standard material library, but you can also define your own materials if you wish.

Material appearance:

Library view:

Revit Architecture 2009 also makes it easier to generate different lighting conditions. You will find presets for the following scenarios:

Exterior: Sun only

Exterior: Sun and Artificial

Exterior: Artificial only

Interior: Sun only

Interior: Sun and Artificial

Interior: Artificial only

To test this, I produced a very simple scene and rendered it several times using some of these presets.

[Figures: the test scene rendered with the Exterior: Sun only preset and several other Exterior and Interior lighting presets (sun only, sun and artificial, artificial only); images not reproduced.]

In general, I am very impressed with the new rendering engine. I am confident that new and existing users will get to grips with this tool and its settings very quickly, and I expect to see some excellent images from the Revit community in the not-too-distant future.


Modeling and Rendering Architecture from Photographs

Modeling and rendering architecture from photographs: A hybrid geometry- and image-based approach

University of California at Berkeley

ABSTRACT

We present a new approach for modeling and rendering existing architectural scenes from a sparse set of still photographs. Our modeling approach, which combines both geometry-based and image-based techniques, has two components. The first component is a photogrammetric modeling method that facilitates the recovery of the basic geometry of the photographed scene. Our photogrammetric modeling approach is effective, convenient, and robust because it exploits the constraints that are characteristic of architectural scenes. The second component is a model-based stereo algorithm, which recovers how the real scene deviates from the basic model. By making use of the model, our stereo technique robustly recovers accurate depth from widely spaced image pairs. Consequently, our approach can model large architectural environments with far fewer photographs than current image-based modeling approaches. For producing renderings, we present view-dependent texture mapping, a method of compositing multiple views of a scene that better simulates geometric detail on basic models. Our approach can be used to recover models for use in either geometry-based or image-based rendering systems. We present results that demonstrate our approach's ability to create realistic renderings of architectural scenes from viewpoints far from the original photographs.
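The abstract names view-dependent texture mapping without spelling out how the views are combined. The sketch below illustrates only the general idea, under our own simplifying assumptions: each photograph's color at a surface point is weighted by the inverse of the angle between that photograph's viewing ray and the novel viewing ray, so photographs taken from directions closest to the new viewpoint dominate. The function names, the inverse-angle weighting, and the assumption that every photograph sees the point unoccluded are hypothetical illustrations, not the paper's exact blending scheme.

```python
import math

def _unit(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def _angle(a, b):
    """Angle in radians between two 3D vectors."""
    a, b = _unit(a), _unit(b)
    cos_ab = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return math.acos(cos_ab)

def blend_view_dependent(point, novel_eye, photos, eps=1e-6):
    """Blend the colors that several photographs record for one surface point.

    photos: list of (camera_center, (r, g, b)) pairs; every photo is assumed
    to see `point` unoccluded (a simplification).
    Hypothetical inverse-angle weighting, not the paper's exact formula:
    photos whose viewing direction is closest to the novel one weigh most.
    """
    novel_dir = tuple(e - p for e, p in zip(novel_eye, point))
    weights, colors = [], []
    for center, rgb in photos:
        photo_dir = tuple(c - p for c, p in zip(center, point))
        weights.append(1.0 / (_angle(novel_dir, photo_dir) + eps))
        colors.append(rgb)
    total = sum(weights)
    return tuple(sum(w * col[i] for w, col in zip(weights, colors)) / total
                 for i in range(3))
```

For example, with one photograph taken from directly above a point and another from the side, a novel viewpoint directly above returns essentially the first photograph's color, while a viewpoint at 45° between them returns an even mix. The small `eps` keeps the weight finite when the novel view coincides with a photograph.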

CR Descriptors: I.2.10 [Artificial Intelligence]: Vision and Scene Understanding - Modeling and recovery of physical attributes; I.3.7 [Computer Graphics]: Three-Dimensional Graphics and Realism - Color, shading, shadowing, and texture; I.4.8 [Image Processing]: Scene Analysis - Stereo; J.6 [Computer-Aided Engineering]: Computer-aided design (CAD).

1 INTRODUCTION

Efforts to model the appearance and dynamics of the real world have produced some of the most compelling imagery in computer graphics. In particular, efforts to model architectural scenes, from the Amiens Cathedral to the Giza Pyramids to Berkeley's Soda Hall, have produced impressive walk-throughs and inspirational fly-bys. Clearly, it is an attractive application to be able to explore the world's architecture unencumbered by fences, gravity, customs, or jetlag.

Recently, creating models directly from photographs has received increased interest in computer graphics. Since real images are used as input, such an image-based system (Fig. 1c) has an advantage in producing photorealistic renderings as output. Some of the most promising of these systems [16, 13] rely on the computer vision technique of computational stereopsis to automatically determine the structure of the scene from the multiple photographs available. As a consequence, however, these systems are only as strong as the underlying stereo algorithms. This has caused problems because state-of-the-art stereo algorithms have a number of significant weaknesses; in particular, the photographs need to appear very similar for reliable results to be obtained. Because of this, current image-based techniques must use many closely spaced images, and in some cases employ significant amounts of user input for each image pair to supervise the stereo algorithm. In this framework, capturing the data for a realistically renderable model would require an impractical number of closely spaced photographs, and deriving the depth from the photographs could require an impractical amount of user input. These concessions to the weakness of stereo algorithms bode poorly for creating large-scale, freely navigable virtual environments from photographs.

Our research aims to make the process of modeling architectural scenes more convenient, more accurate, and more photorealistic than the methods currently available. To do this, we have developed a new approach that draws on the strengths of both geometry-based and image-based methods, as illustrated in Fig. 1b. The result is that our approach to modeling and rendering architecture requires only a sparse set of photographs and can produce realistic renderings from arbitrary viewpoints. In our approach, a basic geometric model of the architecture is recovered interactively with an easy-to-use photogrammetric modeling system, novel views are created using view-dependent texture mapping, and additional geometric detail can be recovered automatically through stereo correspondence. The final images can be rendered with current image-based rendering techniques. Because only photographs are required, our approach to modeling architecture is neither invasive nor does it require architectural plans, CAD models, or specialized instrumentation such as surveying equipment, GPS sensors, or range scanners.

1.1 Background and Related Work

The process of recovering 3D structure from 2D images has been a central endeavor within computer vision, and the process of rendering such recovered structures is a subject receiving increased interest in computer graphics. Although no general technique exists to derive models from images, four particular areas of research have provided results that are applicable to the problem of modeling and rendering architectural scenes. They are: camera calibration, structure from motion, stereo correspondence, and image-based rendering.

1.1.1 Camera Calibration

Recovering 3D structure from images becomes a simpler problem when the cameras used are calibrated, that is, when the mapping between image coordinates and directions relative to each camera is known. This mapping is determined by, among other parameters, the camera's focal length and its pattern of radial distortion. Camera calibration is a well-studied problem in both photogrammetry and computer vision; some successful methods include [20] and [5]. While there has been recent progress in the use of uncalibrated views for 3D reconstruction [7], we have found camera calibration to be a straightforward process that considerably simplifies the problem.

1.1.2 Structure from Motion

Given the 2D projection of a point in the world, its position in 3D space could be anywhere on a ray extending out in a particular direction from the camera's optical center. However, when the projections of a sufficient number of points in the world are observed in multiple images from different positions, it is theoretically possible to deduce the 3D locations of the points as well as the positions of the original cameras, up to an unknown factor of scale.
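The ideas of calibration and back-projection described above can be made concrete with a small sketch. Assuming a pinhole camera with focal length `f` (in pixels), principal point (`cx`, `cy`), and a single radial distortion coefficient `k1` (a common simplified model chosen here for illustration), calibration lets us turn a pixel back into a ray through the optical center; two such rays from different camera positions then pin down the 3D point. The helper names, the distortion model, and the midpoint triangulation method are our own illustrative choices, not the paper's procedure, and both cameras are assumed to share the same orientation so that camera-frame and world-frame directions coincide.

```python
import math

# Hypothetical calibration model for illustration: pinhole camera with focal
# length f (pixels), principal point (cx, cy), and one radial coefficient k1.

def project(point_cam, f, cx, cy, k1):
    """Project a 3D point (camera coordinates, Z > 0) to pixel coordinates."""
    X, Y, Z = point_cam
    x, y = X / Z, Y / Z                      # ideal normalized coordinates
    s = 1.0 + k1 * (x * x + y * y)           # simple radial distortion factor
    return (f * x * s + cx, f * y * s + cy)

def pixel_to_ray(u, v, f, cx, cy, k1, iters=20):
    """Invert the calibration: unit direction (camera frame) of the ray from
    the optical center on which the observed point must lie."""
    xd, yd = (u - cx) / f, (v - cy) / f      # distorted normalized coordinates
    x, y = xd, yd
    for _ in range(iters):                   # fixed-point undistortion
        s = 1.0 + k1 * (x * x + y * y)
        x, y = xd / s, yd / s
    n = math.sqrt(x * x + y * y + 1.0)
    return (x / n, y / n, 1.0 / n)

def _dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of closest approach between rays c1 + s*d1 and c2 + t*d2."""
    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b                    # near zero for (almost) parallel rays
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; cannot triangulate")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(o + s * k for o, k in zip(c1, d1))
    p2 = tuple(o + t * k for o, k in zip(c2, d2))
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))

if __name__ == "__main__":
    f, cx, cy, k1 = 800.0, 320.0, 240.0, -0.05
    P = (0.5, -0.2, 4.0)                         # world point to recover
    c1, c2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)    # camera centers, same orientation
    px1 = project(tuple(p - o for p, o in zip(P, c1)), f, cx, cy, k1)
    px2 = project(tuple(p - o for p, o in zip(P, c2)), f, cx, cy, k1)
    r1 = pixel_to_ray(*px1, f, cx, cy, k1)
    r2 = pixel_to_ray(*px2, f, cx, cy, k1)
    print(triangulate_midpoint(c1, r1, c2, r2))  # approximately P
```

Because the distance between the two camera centers is given here (1 unit), the reconstruction has a definite scale; when the camera positions themselves must be estimated, structure from motion recovers the points only up to the unknown scale factor mentioned above.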

This problem has been studied in photogrammetry, principally for producing topographic maps. In 1913, Kruppa [10] proved the fundamental result that, given two views of five distinct points, one could recover the rotation and translation between the two camera positions as well as the 3D locations of the points (up to a scale factor). Since then, the problem's mathematical and algorithmic aspects have been explored, starting from the fundamental work of Ullman [21] and Longuet-Higgins [11] in the early 1980s. Faugeras's book [6] surveys the state of the art as of 1992. So far, a key realization has been that the recovery of structure is very sensitive to noise in image measurements when the translation between the available camera positions is small.

In this vein, attention has turned to using more than two views, with image stream methods such as [19] or recursive approaches (e.g. [1]). [19] shows excellent results for the case of orthographic cameras, but direct solutions for the perspective case remain elusive. In general, linear algorithms for the problem fail to make use of all available information, while nonlinear minimization methods are prone to difficulties arising from local minima in the parameter space. An alternative formulation of the problem [17] uses lines rather than points as image measurements, but the previously stated concerns were shown to remain largely valid. For purposes of computer graphics, there is yet another problem: the models recovered by these algorithms consist of sparse point fields or individual line segments, which are not directly renderable as solid 3D models.

In our approach, we exploit the fact that we are trying to recover geometric models of architectural scenes, not arbitrary sets of three-dimensional points. This enables us to include additional constraints not typically available to structure from motion algorithms and to overcome the problems of numerical instability that plague such approaches. Our approach is demonstrated in a useful interactive system for building architectural models from photographs.

1.1.3 Stereo Correspondence

The geometric theory of structure from motion assumes that one is able to solve the correspondence problem, which is to identify the points in two or more images that are projections of the same point in the world. In humans, corresponding points in the two slightly differing images on the retinas are determined by the visual cortex in the process called binocular stereopsis.

Years of research (e.g. [2, 4, 8, 9, 12, 15]) have shown that determining stereo correspondences by computer is a difficult problem. In general, current methods succeed only when the images are similar in appearance, as in the case of human vision, which is usually achieved by using cameras that are closely spaced relative to the objects in the scene. As the distance between the cameras (often called the baseline) becomes large, surfaces in the images exhibit different degrees of foreshortening, different patterns of occlusion, and large disparities in their locations in the two images, all of which make it much more difficult for the computer to determine correct stereo correspondences. Unfortunately, the alternative of improving stereo correspondence by using images taken from nearby locations has the disadvantage that computed depth becomes very sensitive to noise in the image measurements.

In this paper, we show that having an approximate model of the photographed scene makes it possible to robustly determine stereo correspondences from images taken from widely varying viewpoints. Specifically, the model enables us to warp the images to eliminate unequal foreshortening and to predict major instances of occlusion before trying to find correspondences.

1.1.4 Image-Based Rendering

In an image-based rendering system, the model consists of a set of images of a scene and their corresponding depth maps. When the depth of every point in an image is known, the image can be re-rendered from any nearby point of view. This is done by projecting the pixels of the image to their proper 3D locations and reprojecting them onto a new image plane. Thus, a new image of the scene is created by warping the images according to their depth maps. A principal attraction of image-based rendering is that it offers a method of rendering arbitrarily complex scenes with a constant amount of computation required per pixel. Using this property, [23] demonstrated that regularly spaced synthetic images (with their computed depth maps) can be warped and composited in real time to produce a virtual environment.
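The reprojection step can be illustrated for a single pixel. This is a minimal sketch, not the rendering code of [23]. It assumes the pinhole convention with the image plane at z = -f, and takes "depth" to mean distance along the camera's viewing axis. The helper names `project` and `unproject` and the two camera poses are hypothetical. A pixel is lifted to 3D using its depth, then projected into a second, nearby camera.

```python
import numpy as np

def project(X, R, t, f):
    """Image coordinates of world point X; image plane at z = -f."""
    cam = R @ (X - t)
    return -f * cam[:2] / cam[2]

def unproject(xy, depth, R, t, f):
    """World point seen at image coordinates xy with the given depth.

    Depth is the distance along the camera's viewing axis, so the
    camera-frame point is (x * depth / f, y * depth / f, -depth).
    """
    x, y = xy
    cam = np.array([x * depth / f, y * depth / f, -depth])
    return R.T @ cam + t                  # invert cam = R (X - t)

f = 1.0
R1, t1 = np.eye(3), np.zeros(3)                   # reference camera
R2, t2 = np.eye(3), np.array([1.0, 0.0, 0.0])     # nearby camera, shifted along x

X_true = np.array([0.4, -0.3, -5.0])
xy1 = project(X_true, R1, t1, f)                  # pixel in the reference image
X_rec = unproject(xy1, 5.0, R1, t1, f)            # lift it using its depth
xy2 = project(X_rec, R2, t2, f)                   # re-render it in the new view
print(xy2)                                        # approximately (-0.12, -0.06)
```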

More recently, [13] presented a real-time image-based rendering system that used panoramic photographs with depth computed, in part, from stereo correspondence. One finding of that work was that extracting reliable depth estimates from stereo is "very difficult". The method was nonetheless able to obtain acceptable results for nearby views by using user input to aid the recovery of stereo depth. The correspondence map for each image pair was seeded with 100 to 500 user-supplied point correspondences and also post-processed.

Even with user assistance, the images used still had to be closely spaced; the largest baseline described in the paper was five feet.

The requirement that samples be close together is a serious limitation to generating a freely navigable virtual environment. Covering just one city block would require thousands of panoramic images spaced five feet apart. Clearly, acquiring so many photographs is impractical. Moreover, even a dense lattice of ground-based photographs would only allow renderings to be generated from within a few feet of the original camera level, precluding any virtual fly-bys of the scene. Extending the dense lattice of images into three dimensions would clearly make the acquisition process even more difficult. The approach described in this paper takes advantage of the structure in architectural scenes so that it requires only a sparse set of photographs. For example, our approach has yielded a virtual fly-around of a building from just twelve standard photographs.

1.2 Overview

In this paper we present three new modeling and rendering techniques: photogrammetric modeling, view-dependent texture mapping, and model-based stereo. We show how these techniques can be used together to yield a convenient, accurate, and photorealistic method of modeling and rendering architecture from photographs. In our approach, the photogrammetric modeling program is used to create a basic volumetric model of the scene, which is then used to constrain stereo matching. Our rendering method composites information from multiple images with view-dependent texture mapping. Our approach succeeds because it splits the task of modeling from images into two kinds of task: those easily accomplished by a person (but not by a computer algorithm), and those easily performed by a computer algorithm (but not by a person).

In Section 2, we present our photogrammetric modeling method. In essence, we have recast the structure from motion problem not as the recovery of individual point coordinates, but as the recovery of the parameters of a constrained hierarchy of parametric primitives. The result is that accurate architectural models can be recovered robustly from just a few photographs and with a minimal number of user-supplied correspondences.

In Section 3, we present view-dependent texture mapping and show how it can be used to render the recovered model realistically. Traditional texture mapping uses a single static image to color in each face of the model. View-dependent texture mapping instead interpolates between the available photographs of the scene depending on the user's point of view. This results in more lifelike animations that better capture surface specularities and unmodeled geometric detail.

Lastly, in Section 4, we present model-based stereo, which is used to automatically refine a basic model of a photographed scene. This technique can be used to recover the structure of architectural ornamentation that would be difficult to recover with photogrammetric modeling. In particular, we show that projecting pairs of images onto an initial approximate model allows conventional stereo techniques to robustly recover very accurate depth measurements from images with widely varying viewpoints.

2 Photogrammetric Modeling

In this section we present our method for photogrammetric modeling. In this method, the computer determines the parameters of a hierarchical model of parametric polyhedral primitives to reconstruct the architectural scene. We have implemented this method in Façade, an easy-to-use interactive modeling program that allows the user to construct a geometric model of a scene from digitized photographs. We first describe Façade from the user's point of view, then describe our model representation, and then explain our reconstruction algorithm. Finally, we present results from using Façade to reconstruct several architectural scenes.

2.1 The User's View

Constructing a geometric model of an architectural scene using Façade is an incremental and straightforward process. Typically, the user selects a small number of photographs to begin with and models the scene one piece at a time. The user can refine the model and include more images in the project until the model meets the desired level of detail.

Fig. 2(a) and (b) show the two types of windows used in Façade: image viewers and model viewers. The user instantiates the components of the model, marks edges in the images, and matches the edges in the images to the edges in the model. When instructed, Façade computes the sizes and relative positions of the model components that best fit the edges marked in the photographs.

The components of the model, called blocks, are parameterized geometric primitives such as boxes, prisms, and surfaces of revolution. A box, for example, is parameterized by its length, width, and height. The user models the scene as a collection of such blocks, creating new block classes as desired. Of course, the user does not need to specify numerical values for the blocks' parameters, since these are recovered by the program.

The user may choose to constrain the sizes and positions of any of the blocks. In Fig. 2(b), most of the blocks have been constrained to have equal length and width. Additionally, the four pinnacles have been constrained to have the same shape. Blocks may also be placed in constrained relations to one another. For example, many of the blocks in Fig. 2(b) have been constrained to sit centered on top of the block below. Such constraints are specified using a graphical 3D interface. When such constraints are provided, they are used to simplify the reconstruction problem.


The user marks edge features in the images using a point-and-click interface; a gradient-based technique as in [14] can be used to align the edges with sub-pixel accuracy. We use edge rather than point features because they are easier to localize and less likely to be completely obscured. Only a section of each edge needs to be marked, making it possible to use partially visible edges. For each marked edge, the user also indicates the corresponding edge in the model. Generally, accurate reconstructions are obtained if there are as many correspondences in the images as there are free camera and model parameters. Thus, Façade reconstructs scenes accurately even when just a portion of the visible edges are marked in the images, and when just a portion of the model edges are given correspondences.

At any time, the user may instruct the computer to reconstruct the scene. The computer then solves for the parameters of the model that cause it to align with the marked features in the images. During the reconstruction, the computer computes and displays the locations from which the photographs were taken. For simple models consisting of just a few blocks, a full reconstruction takes only a few seconds; for more complex models, it can take a few minutes. For this reason, the user can instruct the computer to employ faster but less precise reconstruction algorithms (see Sec. 2.4) during the intermediate stages of modeling.

To verify the accuracy of the recovered model and camera positions, Façade can project the model into the original photographs. Typically, the projected model deviates from the photographs by less than a pixel. Fig. 2(c) shows the results of projecting the edges of the model in Fig. 2(b) into the original photograph.

Finally, the user can generate novel views of the model by positioning a virtual camera at any desired location. Façade will then use the view-dependent texture-mapping method of Section 3 to render a novel view of the scene from the desired location. Fig. 2(d) shows an aerial rendering of the tower model.

2.2 Model Representation

The goal of our model representation is to represent the scene as a surface model with as few parameters as possible. When the model has fewer parameters, the user needs to specify fewer correspondences, and the computer can reconstruct the model more efficiently. In Façade, the scene is represented as a constrained hierarchical model of parametric polyhedral primitives, called blocks. Each block has a small set of parameters that serve to define its size and shape. Each coordinate of each vertex of the block is then expressed as a linear combination of the block's parameters, relative to an internal coordinate frame. For example, for the wedge block in Fig. 3, the coordinates of the vertex $P_0$ are written in terms of the block parameters width, height, and length as $P_0 = (-\mathrm{width}, -\mathrm{height}, \mathrm{length})^T$. Each block is also given an associated bounding box.
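To make the linear parameterization concrete, here is a minimal sketch of a block whose vertex coordinates are linear in its parameters. Each vertex is a coefficient matrix C applied to the parameter vector (width, height, length). Only P0 = (-width, -height, length) comes from the text. The remaining wedge vertices, the dictionary layout, and the function names are illustrative assumptions, not Façade's actual block definition.

```python
import numpy as np

# Parameter vector order for this (hypothetical) wedge block.
PARAM_NAMES = ("width", "height", "length")

# Each vertex is a 3x3 coefficient matrix C with vertex = C @ params,
# so every coordinate is a linear combination of the block parameters.
# Only P0 comes from the text; the other vertices are an assumed wedge
# with a rectangular base at y = -height and a ridge at y = +height.
WEDGE_VERTICES = {
    "P0": np.diag([-1.0, -1.0, 1.0]),
    "P1": np.diag([1.0, -1.0, 1.0]),
    "P2": np.diag([1.0, -1.0, -1.0]),
    "P3": np.diag([-1.0, -1.0, -1.0]),
    "ridge_front": np.diag([0.0, 1.0, 1.0]),
    "ridge_back": np.diag([0.0, 1.0, -1.0]),
}

def vertices(params):
    """Evaluate every vertex for a given parameter vector."""
    p = np.asarray(params, dtype=float)
    return {name: C @ p for name, C in WEDGE_VERTICES.items()}

def bounding_box(params):
    """Axis-aligned bounding box (min corner, max corner) in the block frame."""
    pts = np.array(list(vertices(params).values()))
    return pts.min(axis=0), pts.max(axis=0)

v = vertices([2.0, 1.0, 3.0])   # width = 2, height = 1, length = 3
print(v["P0"])                  # (-width, -height, length) -> (-2, -1, 3)
```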

The relationship between a block and its parent is most generally represented as a rotation matrix $R$ and a translation vector $t$. This representation requires six parameters: three each for $R$ and $t$. In architectural scenes, however, the relationship between two blocks usually has a simple form that can be represented with fewer parameters, and Façade allows the user to build such constraints on $R$ and $t$ into the model. The rotation $R$ between a block and its parent can be specified in one of three ways:

- as an unconstrained rotation, requiring three parameters;
- as a rotation about a particular coordinate axis, requiring just one parameter;
- as a fixed or null rotation, requiring no parameters.

Likewise, Façade allows constraints to be placed on each component of the translation vector $t$. Specifically, the user can constrain the bounding boxes of two blocks to align in some manner along each dimension. For example, to ensure that the roof block in Fig. 4 lies on top of the first-story block, the user can require that the maximum $y$ extent of the first-story block equal the minimum $y$ extent of the roof block. With this constraint, the translation along the $y$ axis is computed as

$$t_y = \mathrm{first\_story}^{\mathrm{MAX}}_y - \mathrm{roof}^{\mathrm{MIN}}_y$$

rather than represented as a parameter of the model.
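The following sketch shows how such a constraint removes a free parameter: the roof's y translation is derived from the two bounding boxes instead of being solved for. The box representation and the numeric extents are hypothetical. As in the formula above, each box is expressed in its own block's frame.

```python
def stacked_translation_y(lower_box, upper_box):
    """t_y = lower^MAX_y - upper^MIN_y, so the upper block rests on the lower one.

    Boxes are (min_corner, max_corner) pairs of (x, y, z) tuples, each given
    in its own block's coordinate frame.
    """
    return lower_box[1][1] - upper_box[0][1]

# Hypothetical boxes: a first story 4 units tall centred on its origin, and a
# roof whose frame starts 0.5 units below its own origin.
first_story = ((-5.0, -2.0, -3.0), (5.0, 2.0, 3.0))
roof = ((-5.0, -0.5, -3.0), (5.0, 1.5, 3.0))

t_y = stacked_translation_y(first_story, roof)
print(t_y)                 # 2.5
print(roof[0][1] + t_y)    # 2.0: the roof's bottom meets the first story's top
```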

Each parameter of each instantiated block is actually a reference to a named symbolic variable, as shown in Fig. 5. As a result, two parameters of different blocks (or of the same block) can be equated by having each parameter reference the same symbol. This facility allows the user to equate two or more of the dimensions in a model, which makes modeling symmetrical blocks and repeated structure more convenient. Importantly, these constraints reduce the number of degrees of freedom in the model, which, as we will show, simplifies the structure recovery problem.
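A minimal data-structure sketch of parameters that reference shared symbols follows. The classes `Symbol` and `Block` and the helper `free_symbols` are hypothetical; the text does not describe Façade's internal representation beyond the symbol references. The example mirrors the four pinnacles of Fig. 2(b), which share one shape and have equal length and width. Twelve parameters therefore collapse to two free symbols.

```python
from dataclasses import dataclass, field

@dataclass
class Symbol:
    """A named model variable that any number of block parameters may reference."""
    name: str
    value: float = 1.0

@dataclass
class Block:
    name: str
    params: dict = field(default_factory=dict)   # parameter name -> Symbol

    def value(self, param):
        return self.params[param].value

def free_symbols(blocks):
    """Distinct symbols, i.e. the degrees of freedom the solver must recover."""
    unique = {}
    for block in blocks:
        for sym in block.params.values():
            unique[id(sym)] = sym
    return list(unique.values())

# Four pinnacles with the same shape, each with equal length and width:
# 4 blocks x 3 parameters collapse to 2 symbols.
pin_w = Symbol("pinnacle_width", 0.8)
pin_h = Symbol("pinnacle_height", 3.0)
pinnacles = [Block(f"pinnacle{i}", {"width": pin_w, "length": pin_w, "height": pin_h})
             for i in range(4)]

print(len(free_symbols(pinnacles)))   # 2
pin_w.value = 1.1                     # the solver updates the shared symbol once...
print(pinnacles[3].value("length"))   # ...and every referencing parameter sees 1.1
```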

Let $g_1(X), \ldots, g_n(X)$ represent the rigid transformations associated with each link in the chain that connects a particular block to the root of the model hierarchy, where $X$ is the vector of all the model parameters. The world coordinates $P_w(X)$ of a particular block vertex $P(X)$ are then:

$$P_w(X) = g_1(X) \cdots g_n(X)\, P(X) \qquad (1)$$

Similarly, the world orientation $v_w(X)$ of a particular line segment $v(X)$ is:

$$v_w(X) = g_1(X) \cdots g_n(X)\, v(X) \qquad (2)$$

In these equations, the point vectors $P$ and $P_w$ and the orientation vectors $v$ and $v_w$ are represented in homogeneous coordinates.
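Equations (1) and (2) can be evaluated by multiplying 4×4 homogeneous matrices. In the minimal sketch below, points carry a homogeneous coordinate of 1 and direction vectors a 0. Translations therefore move vertices but leave line orientations unchanged. The two-link chain (a translation, then a 90° rotation about the vertical axis) and the helper names are made-up examples.

```python
import numpy as np

def rigid(R=None, t=None):
    """4x4 homogeneous matrix: rotate by R, then translate by t."""
    g = np.eye(4)
    if R is not None:
        g[:3, :3] = R
    if t is not None:
        g[:3, 3] = t
    return g

def rot_y(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def to_world(chain, local, is_point=True):
    """Evaluate g1 ... gn applied to a point (w = 1) or a direction (w = 0)."""
    h = np.append(np.asarray(local, dtype=float), 1.0 if is_point else 0.0)
    for g in reversed(chain):            # gn acts first, g1 last
        h = g @ h
    return h[:3]

# Hypothetical two-link chain: a translation up to the top of a first story,
# then a block rotated 90 degrees about the vertical (y) axis.
chain = [rigid(t=[0.0, 2.5, 0.0]), rigid(R=rot_y(np.pi / 2))]
print(to_world(chain, [1.0, 0.0, 0.0]))                  # approx. [0, 2.5, -1]
print(to_world(chain, [1.0, 0.0, 0.0], is_point=False))  # approx. [0, 0, -1]
```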

Modeling the scene with polyhedral blocks, as opposed to points, line segments, surface patches, or polygons, is advantageous for a number of reasons:

Most architectural scenes are well modeled by an arrangement of geometric primitives.

Blocks implicitly contain common architectural elements such as parallel lines and right angles.

Manipulating block primitives is convenient because they are at a suitably high level of abstraction; individual features such as points and lines are less manageable.

A surface model of the scene is readily obtained from the blocks, so there is no need to infer surfaces from discrete features.

Modeling in terms of blocks and relationships greatly reduces the number of parameters that the reconstruction algorithm needs to recover.

The last point is crucial to the robustness of our reconstruction algorithm and the viability of our modeling system, and is best illustrated with an example. The model in Fig. 2 is parameterized by just 33 variables (the unknown camera position adds six more). If each block in the scene were unconstrained (in its dimensions and position), the model would have 240 parameters; if each line segment in the scene were treated independently, the model would have 2,896 parameters. This reduction in the number of parameters greatly enhances the robustness and efficiency of the method compared to traditional structure from motion algorithms. Lastly, the number of correspondences needed to suitably overconstrain the minimization is roughly proportional to the number of parameters in the model. This reduction therefore keeps the number of correspondences required of the user manageable.

2.3 Reconstruction Algorithm

Our reconstruction algorithm works by minimizing an objective function $O$ that sums the disparity between the projected edges of the model and the edges marked in the images, i.e. $O = \sum_i \mathrm{Err}_i$, where $\mathrm{Err}_i$ represents the disparity computed for edge feature $i$. Thus, the unknown model parameters and camera positions are computed by minimizing $O$ with respect to these variables. Our system uses the error function $\mathrm{Err}_i$ from [17], described below.

Fig. 6(a) shows how a straight line in the model projects onto the image plane of a camera. The straight line can be defined by a pair of vectors $\langle v, d \rangle$, where $v$ represents the direction of the line and $d$ represents a point on the line. These vectors can be computed from Equations 2 and 1, respectively. The position of the camera with respect to world coordinates is given in terms of a rotation matrix $R_j$ and a translation vector $t_j$. The normal vector denoted by $m$ in the figure is computed from the following expression:

$$m = R_j \left( v \times (d - t_j) \right) \qquad (3)$$

The projection of the line onto the image plane is simply the intersection of the plane defined by $m$ with the image plane, located at $z = -f$, where $f$ is the focal length of the camera. Thus, the image edge is defined by the equation $m_x x + m_y y - m_z f = 0$.
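A quick numerical check of this construction follows, using m = R_j (v × (d - t_j)) with image plane z = -f. The sketch builds m for an arbitrary model line and camera, projects several points of the line, and confirms each satisfies m_x x + m_y y - m_z f = 0. The line, camera pose, and helper names are arbitrary test values, not data from the paper.

```python
import numpy as np

def line_normal(v, d, R, t):
    """m = R (v x (d - t)): normal of the plane through the camera center and the line."""
    return R @ np.cross(v, d - t)

def project(X, R, t, f):
    """Image coordinates of world point X; image plane at z = -f."""
    cam = R @ (X - t)
    return -f * cam[:2] / cam[2]

def line_residual(m, xy, f):
    """m_x x + m_y y - m_z f, which is zero for points on the projected line."""
    return m[0] * xy[0] + m[1] * xy[1] - m[2] * f

theta = 0.3                                        # arbitrary camera rotation about z
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta), np.cos(theta), 0.0],
              [0.0, 0.0, 1.0]])
t = np.array([0.2, 0.1, 4.0])                      # camera center
v = np.array([1.0, 0.5, 0.0])                      # line direction
d = np.array([0.0, 1.0, -3.0])                     # a point on the line
f = 1.5

m = line_normal(v, d, R, t)
residuals = [line_residual(m, project(d + s * v, R, t, f), f) for s in (-1.0, 0.0, 2.0)]
print(np.allclose(residuals, 0.0))                 # True
```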

Fig. 6(b) shows how the error between the observed image edge $\{(x_1, y_1), (x_2, y_2)\}$ and the predicted image line is calculated for each correspondence. Points on the observed edge segment can be parameterized by a single scalar variable $s \in [0, l]$, where $l$ is the length of the edge. We let $h(s)$ be the function that returns the shortest distance from a point on the segment, $p(s)$, to the predicted edge.

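With these definitions, the total error between the observed edge segment and the predicted edge is calculated as:

$$\mathrm{Err}_i = \int_0^l h^2(s)\, ds = \frac{l}{3}\left(h_1^2 + h_1 h_2 + h_2^2\right) \qquad (4)$$

where $h_1 = h(0)$ and $h_2 = h(l)$ are the signed distances of the two endpoints of the observed segment from the predicted line.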
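The signed distance from p(s) to the predicted line varies linearly along the segment. The integral of h(s)² therefore has the closed form (l/3)(h1² + h1·h2 + h2²), where h1 and h2 are the signed distances at the two endpoints. The sketch below assumes the integrand is the squared distance. It compares that closed form against trapezoidal quadrature, including a segment that crosses the predicted line. The line coefficients and endpoints are arbitrary.

```python
import numpy as np

def signed_distance(m, xy, f):
    """Signed distance from image point(s) xy to the line m_x x + m_y y - m_z f = 0."""
    return (m[0] * xy[0] + m[1] * xy[1] - m[2] * f) / np.hypot(m[0], m[1])

def edge_error(m, p1, p2, f):
    """Closed form of the integral of h(s)^2 along the observed segment p1 -> p2."""
    l = np.linalg.norm(np.subtract(p2, p1))
    h1, h2 = signed_distance(m, p1, f), signed_distance(m, p2, f)
    return l / 3.0 * (h1 * h1 + h1 * h2 + h2 * h2)

def edge_error_numeric(m, p1, p2, f, n=20001):
    """Same integral by the trapezoidal rule, as a check."""
    p1, p2 = np.asarray(p1, dtype=float), np.asarray(p2, dtype=float)
    l = np.linalg.norm(p2 - p1)
    s = np.linspace(0.0, l, n)
    pts = p1 + (s / l)[:, None] * (p2 - p1)      # p(s) for each sample s
    h_sq = signed_distance(m, pts.T, f) ** 2
    return float(np.sum(0.5 * (h_sq[:-1] + h_sq[1:]) * np.diff(s)))

m = np.array([0.3, -1.0, 0.2])   # arbitrary predicted-line coefficients
f = 1.0
p1, p2 = (0.0, 0.5), (2.0, 1.5)  # arbitrary observed endpoints
print(edge_error(m, p1, p2, f), edge_error_numeric(m, p1, p2, f))  # agree closely
```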

The final objective function $O$ is the sum of the error terms resulting from each correspondence. We minimize $O$ using a variant of the Newton-Raphson method, which involves calculating the gradient and Hessian of $O$ with respect to the parameters of the camera and the model. As we have shown, it is simple to construct symbolic expressions for $m$ in terms of the unknown model parameters. The minimization algorithm differentiates these expressions symbolically to evaluate the gradient and Hessian after each iteration. The procedure is inexpensive because the expressions for $d$ and $v$ in Equations 2 and 1 have a particularly simple form.

2.4 Computing an Initial Estimate

The objective function described in Section 2.3 is nonlinear with respect to the model and camera parameters and consequently can have local minima. If the algorithm begins at a random location in the parameter space, it stands little chance of converging to the correct solution. To overcome this problem, we have developed a method to directly compute a good initial estimate for the model parameters and camera positions that is near the correct solution. In practice, our initial estimate method consistently enables the nonlinear minimization algorithm to converge to the correct solution.

Our initial estimate method consists of two procedures performed in sequence. The first procedure estimates the camera rotations, while the second estimates the camera translations and the parameters of the model. Both initial estimate procedures are based on an examination of Equation 3. From this equation the following constraints can be deduced:

$$m^T R_j v = 0 \qquad (5)$$

$$m^T R_j (d - t_j) = 0 \qquad (6)$$

Given an observed edge $u_{ij}$, the measured normal $m'$ to the plane passing through the camera center is:

$$m' = \begin{pmatrix} x_1 \\ y_1 \\ -f \end{pmatrix} \times \begin{pmatrix} x_2 \\ y_2 \\ -f \end{pmatrix} \qquad (7)$$

From these equations, we see that any model edges of known orientation constrain the possible values for $R_j$. Most architectural models contain many such edges (e.g. horizontal and vertical lines). Each camera rotation can therefore usually be estimated from the model independently of the model parameters and of the camera's location in space. Our method does this by minimizing the following objective function $O_1$, which sums the extents to which the rotations $R_j$ violate the constraints arising from Equation 5:

$$O_1 = \sum_i \left( m_i^T R_j v_i \right)^2 \qquad (8)$$

Here the sum runs over the marked edges $i$ whose model orientation $v_i$ is known, $m_i$ is the measured normal $m'$ for that edge, and $R_j$ is the rotation of the camera that observed it.
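The rotation step can be tried on synthetic data. This sketch rests on a simplifying assumption not made in the text: the camera rotation is restricted to a single yaw angle about the vertical axis. O1 = Σ (m_iᵀ R v_i)² is then minimized by a dense scan over that angle. The paper does not specify this scan, and the scene, camera, and helper names are hypothetical. The measured normals come from m' = (x1, y1, -f) × (x2, y2, -f), normalized so every edge counts equally. No model positions enter O1, only the known edge directions.

```python
import numpy as np

def rot_y(theta):
    """Rotation by theta about the vertical (y) axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def project(X, R, t, f):
    cam = R @ (X - t)
    return -f * cam[:2] / cam[2]

def measured_normal(p1, p2, f):
    """Unit-length version of m' = (x1, y1, -f) x (x2, y2, -f)."""
    n = np.cross([p1[0], p1[1], -f], [p2[0], p2[1], -f])
    return n / np.linalg.norm(n)

def o1(theta, normals, directions):
    """Sum of (m_i^T R(theta) v_i)^2: how far R(theta) violates m^T R v = 0."""
    R = rot_y(theta)
    return sum(float(m @ R @ v) ** 2 for m, v in zip(normals, directions))

# Synthetic data: a camera with yaw 0.4 rad observing horizontal model edges.
f, true_yaw = 1.0, 0.4
R_true, t = rot_y(true_yaw), np.array([0.0, 1.0, 8.0])
edges = [(np.array([-2.0, 0.0, 0.0]), np.array([1.0, 0.0, 0.0])),   # (start, direction)
         (np.array([-2.0, 3.0, 0.0]), np.array([1.0, 0.0, 0.0])),
         (np.array([2.0, 0.0, 0.0]), np.array([0.0, 0.0, -1.0]))]
normals = [measured_normal(project(P, R_true, t, f), project(P + v, R_true, t, f), f)
           for P, v in edges]
directions = [v for _, v in edges]

# A half-turn about y leaves O1 unchanged, so half a circle is scanned.
thetas = np.linspace(-np.pi / 2, np.pi / 2, 3601)
best = thetas[np.argmin([o1(th, normals, directions) for th in thetas])]
print(best)  # close to 0.4
```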

Once initial estimates for the camera rotations are computed, Equation 6 is used to obtain initial estimates of the model parameters and camera locations. Equation 6 reflects the constraint that all of the points on the line defined by the tuple $\langle v, d \rangle$ should lie on the plane with normal vector $m$ passing through the camera center. This constraint is expressed in the following objective function $O_2$, where $P_i(X)$ and $Q_i(X)$ are expressions for the vertices of an edge of the model:

$$O_2 = \sum_i \left( m_i^T R_j \left( P_i(X) - t_j \right) \right)^2 + \left( m_i^T R_j \left( Q_i(X) - t_j \right) \right)^2 \qquad (9)$$

In the special case where all of the block relations in the model have a known rotation, this objective function becomes a simple quadratic form, which is easily minimized by solving a set of linear equations.
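The linear case can be illustrated with a sketch built on stated assumptions. The camera rotation is taken as already estimated. The model is a hypothetical box with a known 2 × 2 footprint and an unknown height h, so every vertex has the form b + a·h. Each edge endpoint then contributes one residual m_iᵀ R_j (b + a·h - t_j) that is linear in the unknowns [h, t_j]. Minimizing O2 therefore reduces to ordinary linear least squares. The known footprint fixes the otherwise unrecoverable global scale. All names and values are illustrative.

```python
import numpy as np

def rot_y(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def project(X, R, t, f):
    cam = R @ (X - t)
    return -f * cam[:2] / cam[2]

def measured_normal(p1, p2, f):
    return np.cross([p1[0], p1[1], -f], [p2[0], p2[1], -f])

# Hypothetical box: known 2 x 2 footprint, unknown height h.
# Each vertex is b + a * h, i.e. linear in the unknown parameter.
corners = {(x, top, z): (np.array([x, 0.0, z]), np.array([0.0, float(top), 0.0]))
           for x in (-1.0, 1.0) for top in (0, 1) for z in (-1.0, 1.0)}
edges = [((x, 0, z), (x, 1, z)) for x in (-1.0, 1.0) for z in (-1.0, 1.0)]         # vertical
edges += [((-1.0, top, z), (1.0, top, z)) for top in (0, 1) for z in (-1.0, 1.0)]  # along x
edges += [((x, top, -1.0), (x, top, 1.0)) for top in (0, 1) for x in (-1.0, 1.0)]  # along z

f, R = 1.0, rot_y(0.3)                       # rotation assumed already estimated
h_true, t_true = 3.0, np.array([1.5, 2.0, 9.0])

rows, rhs = [], []
for k0, k1 in edges:
    ends = [b + a * h_true for b, a in (corners[k0], corners[k1])]
    p1, p2 = (project(P, R, t_true, f) for P in ends)   # synthetic observations
    mR = measured_normal(p1, p2, f) @ R                 # m^T R_j as a row vector
    for b, a in (corners[k0], corners[k1]):
        # m^T R (b + a h - t) = 0  ->  [m^T R a, -m^T R] . [h, t] = -m^T R b
        rows.append(np.concatenate([[mR @ a], -mR]))
        rhs.append(-(mR @ b))

u, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
print(u)  # approximately [3.0, 1.5, 2.0, 9.0] = [h, t_x, t_y, t_z]
```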

Once the initial estimate is obtained, the nonlinear minimization over the entire parameter space is applied to produce the best possible reconstruction. Typically, the minimization requires fewer than ten iterations and adjusts the parameters of the model by at most a few percent from the initial estimates. The edges of the recovered models typically conform to the original photographs to within a pixel.

2.5 Results

Fig. 2 showed the results of using Façade to reconstruct a clock tower from a single image. Figs. 7 and 8 show the results of using Façade to reconstruct a high school building from twelve photographs. (The model was originally constructed from just five images; the remaining images were added to the project for the purpose of generating renderings using the techniques of Section 3.) The photographs were taken with a calibrated 35 mm still camera with a standard 50 mm lens and digitized with the PhotoCD process. Images at 1536 × 1024 pixel resolution were processed to correct for lens distortion, then filtered down to 768 × 512 pixels for use in the modeling system. Fig. 8 shows some views of the recovered model and camera positions, and Fig. 9 shows a synthetic view of the building generated by the technique in Sec. 3.

Fig. 10 shows the reconstruction of another tower from a single photograph. The dome was modeled specially, since the reconstruction algorithm does not recover curved surfaces. The user constrained a two-parameter hemisphere block to sit centered on top of the tower, and manually adjusted its height and width to align with the photograph. Each of the models presented took approximately four hours to create.

3 View-Dependent Texture Mapping

In this section we present view-dependent texture mapping, an effective method of rendering the scene that involves projecting the original photographs onto the model. This form of texture mapping is most effective when the model conforms closely to the actual structure of the scene and when the original photographs show the scene in similar lighting conditions. In Section 4 we will show how view-dependent texture mapping can be used together with model-based stereo to produce realistic renderings when the recovered model only approximates the structure of the scene.

Since the camera positions of the original photographs are recovered during the modeling phase, projecting the images onto the model is straightforward. In this section, we first describe how we project a single image onto the model and then how we merge several image projections to render the entire model. Unlike traditional texture mapping, our method projects different images onto the model depending on the user's viewpoint. As a result, our view-dependent texture mapping can give a better illusion of additional geometric detail in the model.

3.1 Projecting a Single Image

The process of texture-mapping a single image onto the model can be thought of as replacing each camera with a slide projector that projects the original image onto the model. When the model is not convex, some parts of the model may shadow others with respect to the camera. Such shadowed regions could be determined using an object-space visible surface algorithm or an image-space ray casting algorithm. We instead use an image-space shadow map algorithm based on [22], since it is efficiently implemented using z-buffer hardware.
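The two-pass idea behind a shadow map can be sketched in software. This is a stand-in for the hardware z-buffer version, not the algorithm of [22] itself. It assumes the surfaces are given as sample points and the projecting camera sits at the origin looking down -z. The resolution, image extent, and depth tolerance are arbitrary choices. Pass 1 stores the nearest depth per pixel; pass 2 maps a point only if its depth matches that stored value.

```python
import numpy as np

def project(points, f):
    """Camera at the origin looking down -z; returns image coords and depths."""
    return -f * points[:, :2] / points[:, 2:3], -points[:, 2]

def shadow_map_visibility(points, f=1.0, res=64, extent=1.0, eps=1e-6):
    """True for points that are the nearest surface seen by the camera.

    Pass 1 stores the minimum depth per pixel (the shadow map / z-buffer);
    pass 2 keeps a point only if its depth matches the stored value.
    """
    xy, depth = project(points, f)
    pix = np.floor((xy + extent) / (2 * extent) * res).astype(int)
    inside = np.all((pix >= 0) & (pix < res), axis=1)
    zbuf = np.full((res, res), np.inf)
    for (i, j), z, ok in zip(pix, depth, inside):
        if ok:
            zbuf[j, i] = min(zbuf[j, i], z)
    return np.array([bool(ok) and z <= zbuf[j, i] + eps
                     for (i, j), z, ok in zip(pix, depth, inside)])

pts = np.array([[0.1, 0.1, -2.0],     # front surface
                [0.2, 0.2, -4.0],     # same ray, farther away: shadowed
                [-0.3, 0.2, -3.0]])   # different ray: unoccluded
print(shadow_map_visibility(pts))     # first and third mapped, second shadowed
```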

Fig. 11, upper left, shows the results of mapping a single image onto the high school building model. The recovered camera position for the projected image is indicated in the lower left corner of the image. Because of self-shadowing, not every point on the model within the camera's viewing frustum is mapped.

3.2 Compositing Multiple Images

In general, each photograph will view only a piece of the model. Thus, it is usually necessary to use multiple images in order to render the entire model from a novel point of view. The top images of Fig. 11 show two different images mapped onto the model and rendered from a novel viewpoint. Some pixels are colored in just one of the renderings, while some are colored in both. These two renderings can be merged into a composite rendering by considering the corresponding pixels in the rendered views. If a pixel is mapped in only one rendering, its value from that rendering is used in the composite. If it is mapped in more than one rendering, the renderer has to decide which image (or combination of images) to use.

It would be convenient, of course, if the projected images agreed perfectly where they overlap. However, the images will not necessarily agree if there is unmodeled geometric detail in the building, or if the surfaces of the building exhibit non-Lambertian reflection. In this case, the best image to use is clearly the one with the viewing angle closest to that of the rendered view. However, using the image closest in angle at every pixel means that neighboring rendered pixels may be sampled from different original images. When this happens, specularity and unmodeled geometric detail can cause visible seams in the rendering. To avoid this problem, we smooth these transitions through weighted averaging, as in Fig. 12.
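The angle-based weighting of Fig. 12 can be sketched as follows. Each real view receives a weight inversely proportional to its angle, and the weights are normalized to sum to one. Two details here are assumptions. The angle is read geometrically, measured at the model point between the rays toward the virtual and real camera centers. A view with a zero angle takes all the weight. The function names and camera positions are hypothetical.

```python
import numpy as np

def view_angle(point, virtual_center, camera_center):
    """Angle at the model point between the rays to the virtual and real cameras."""
    a = virtual_center - point
    b = camera_center - point
    cos = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
    return np.arccos(np.clip(cos, -1.0, 1.0))

def blend_weights(point, virtual_center, camera_centers, eps=1e-9):
    """Weights inversely proportional to each real view's angle, normalised to sum to 1."""
    angles = np.array([view_angle(point, virtual_center, c) for c in camera_centers])
    if np.any(angles < eps):                 # virtual view coincides with a real view
        w = (angles < eps).astype(float)
    else:
        w = 1.0 / angles
    return w / w.sum()

point = np.zeros(3)
cams = [np.array([10.0, 0.0, 0.0]), np.array([0.0, 0.0, 10.0])]
print(blend_weights(point, np.array([10.0, 0.0, 10.0]), cams))  # equal: both 45 degrees away
print(blend_weights(point, np.array([10.0, 0.0, 2.0]), cams))   # favours the first camera
```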

Figure 11: The process of assembling projected images to form a composite rendering. The top two pictures show two images projected onto the model from their respective recovered camera positions. The lower left picture shows the results of compositing these two renderings using our view-dependent weighting function. The lower right picture shows the results of compositing renderings of all twelve original images. Some pixels near the front edge of the roof that are not seen in any image have been filled in with the hole-filling algorithm from [23].

Even with this weighting, neighboring pixels can still be sampled from different views at the boundary of a projected image, since the contribution of an image must be zero outside its boundary. To address this, the pixel weights are ramped down near the boundary of the projected images. Although this method does not guarantee smooth transitions in all cases, we have found that it eliminates most artifacts in renderings and animations arising from such seams.

Figure 12: The weighting function used in view-dependent texture mapping. The pixel in the virtual view corresponding to the point on the model is assigned a weighted average of the corresponding pixels in actual views 1 and 2. The weights $w_1$ and $w_2$ are inversely proportional to the magnitudes of the angles $a_1$ and $a_2$. Alternatively, more sophisticated weighting functions based on expected foreshortening and image resampling can be used.

If an original photograph features an unwanted car, tourist, or other object in front of the architecture of interest, the unwanted object will be projected onto the surface of the model. To prevent this from happening, the user may mask out the object by painting over the obstruction with a reserved color. The rendering algorithm will then set the weights for any pixels corresponding to the masked regions to zero, and decrease the weights of the pixels near the boundary as before to minimize seams. Any regions in the composite image that are occluded in every projected image are filled in using the hole-filling method from [23].
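The boundary ramp, the masking, and the detection of holes can be combined in a single-scanline sketch. It assumes each rendered pixel knows which column of each source photograph it samples, with NaN where that photograph does not cover it. The linear ramp shape, ramp width, view weights, and helper names are hypothetical. For brevity the sketch only zeroes masked pixels rather than also ramping near mask boundaries. It marks holes with NaN instead of implementing the hole-filling method of [23].

```python
import numpy as np

def edge_ramp(src_u, width, ramp):
    """Weight that falls linearly to 0 within `ramp` pixels of a source-image border.

    src_u holds, for each rendered pixel, the column it samples in the source
    photograph (NaN where that photograph does not cover the pixel).
    """
    dist = np.minimum(src_u, (width - 1) - src_u)
    return np.nan_to_num(np.clip(dist / ramp, 0.0, 1.0))   # NaN -> weight 0

def composite(colors, src_us, view_weights, masks, width, ramp=4.0):
    """Weighted average of projected views along one scanline; NaN marks holes."""
    num = np.zeros(len(colors[0]))
    den = np.zeros(len(colors[0]))
    for c, u, vw, masked in zip(colors, src_us, view_weights, masks):
        w = vw * edge_ramp(u, width, ramp) * ~masked   # painted-out pixels get 0
        num += w * np.nan_to_num(c)
        den += w
    out = np.full(len(num), np.nan)
    np.divide(num, den, out=out, where=den > 0)
    return out

# Two hypothetical 16-pixel-wide photographs projected onto an 8-pixel scanline.
a_u = np.arange(8, 16, dtype=float)                       # view A nears its right border
b_u = np.array([np.nan, np.nan, 0, 1, 2, 3, 4, 5])        # view B starts at its left border
colors = [np.full(8, 10.0), np.where(np.isnan(b_u), np.nan, 20.0)]
no_mask = [np.zeros(8, dtype=bool), np.zeros(8, dtype=bool)]
blended = composite(colors, [a_u, b_u], [1.0, 1.0], no_mask, width=16)
print(blended)   # ramps smoothly from 10 to 20 instead of jumping

mask_b = np.zeros(8, dtype=bool)
mask_b[7] = True                                          # e.g. a tourist painted out in B
with_hole = composite(colors, [a_u, b_u], [1.0, 1.0], [no_mask[0], mask_b], width=16)
print(with_hole[7])  # nan: no view covers this pixel, so it is left for hole filling
```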

In the discussion so far, projected image weights are computed at every pixel of every projected rendering. Since the weighting function is smooth (though not constant) across flat surfaces, it is generally not necessary to compute it for every pixel of every face of the model. For example, using a single weight for each face of the model, computed at the face's center, produces acceptable results. By coarsely subdividing large faces, the results are visually indistinguishable from the case where a unique weight is computed for every pixel. Importantly, this technique suggests a real-time implementation of view-dependent texture mapping. Such an implementation would use a texture-mapping graphics pipeline to render the projected views and α-channel blending to composite them.

For complex models in which most images are entirely occluded for a typical view, it can be very inefficient to project every original photograph to the novel viewpoint. Some efficient techniques for determining such visibility a priori in architectural scenes through spatial partitioning are presented in [18].

4 Model-Based Stereopsis

The modeling system described in Section 2 allows the user to create a basic model of a scene, but in general the scene will have additional geometric detail (such as friezes and cornices) not captured in the model. In this section we present a new method of recovering such additional geometric detail automatically through stereo correspondence, which we call model-based stereo. Model-based stereo differs from traditional stereo in that it measures how the actual scene deviates from the approximate model, rather than trying to measure the structure of the scene without any prior information. The model serves to place the images into a common frame of reference that makes stereo correspondence possible even for images taken from relatively far apart. The stereo correspondence information can then be used to render novel views of the scene using image-based rendering techniques.

Figure 13: View-dependent texture mapping.
(a) A detail view of the high school model.
(b) A rendering of the model from the same position using view-dependent texture mapping. The model does not capture the slightly recessed windows. Even so, the windows appear properly recessed, because the texture map is sampled primarily from a photograph that viewed the windows from approximately the same direction.
(c) The same piece of the model viewed from a different angle, using the same texture map as in (b). Since the texture is not selected from an image that viewed the model from approximately the same angle, the recessed windows appear unnatural.
(d) A more natural result obtained by using view-dependent texture mapping. Since the angle of view in (d) differs from that in (b), a different composition of the original images is used to texture-map the model.

As in traditional stereo, given two images (which we call the key and offset images), model-based stereo computes the associated depth map for the key image by determining corresponding points in the key and offset images. Like many stereo algorithms, our method is correlation-based, in that it attempts to determine the corresponding point in the offset image by comparing small pixel neighborhoods around the points. As such, correlation-based stereo algorithms generally require the neighborhood of each point in the key image to resemble the neighborhood of its corresponding point in the offset image.
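A generic correlation-based match can be sketched with normalized cross-correlation (NCC) over small windows. This is not the specific algorithm of [3]; it assumes the two rows are already aligned along the same epipolar line (rectified scanlines), and `ncc`, `best_match`, `half`, and `max_disp` are names and parameters chosen here for illustration.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equal-length pixel windows (range [-1, 1])."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / den if den > 0 else 0.0


def best_match(key_row, other_row, x, half=2, max_disp=10):
    """Search other_row for the window most correlated with key_row around pixel x.

    Assumes both rows lie along the same epipolar line (rectified scanlines), so the
    search is one-dimensional. Returns (disparity, score).
    """
    window = key_row[x - half:x + half + 1]
    best = (None, -math.inf)
    for d in range(-max_disp, max_disp + 1):
        xo = x + d
        if xo - half < 0 or xo + half >= len(other_row):
            continue  # candidate window would fall off the image
        score = ncc(window, other_row[xo - half:xo + half + 1])
        if score > best[1]:
            best = (d, score)
    return best


# Usage: the second row is the first shifted right by three pixels.
key = [0, 0, 0, 1, 5, 2, 7, 3, 0, 0, 0, 0, 0, 0, 0]
other = [0, 0, 0] + key[:-3]
d, score = best_match(key, other, x=5)
```

NCC is insensitive to gain and offset changes between images, but it still assumes the two windows cover the same patch of surface with similar shape, which is exactly the assumption that strong foreshortening breaks.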

The problem we face is that when the key and offset images are taken from relatively far apart, as is the case for our modeling approach, corresponding pixel neighborhoods can be foreshortened very differently. In Figs. 14(a) and (c), pixel neighborhoods toward the right of the key image are foreshortened horizontally by nearly a factor of four in the offset image.

The key observation in model-based stereo is that even though two images of the same scene may appear very different, they appear similar after being projected onto an approximate model of the scene. In particular, projecting the offset image onto the model and viewing it from the position of the key image produces what we call the warped offset image, which appears very similar to the key image. The geometrically detailed scene in Fig. 14 was modeled as two flat surfaces with our modeling program, which also determined the relative camera positions. As expected, the warped offset image (Fig. 14(b)) exhibits the same pattern of foreshortening as the key image.

In model-based stereo, pixel neighborhoods are compared between the key and warped offset images rather than the key and offset images. When a correspondence is found, it is simple to convert its disparity to the corresponding disparity between the key and offset images, from which the point's depth is easily calculated. Figure 14(d) shows a disparity map computed for the key image in (a).

(a) Key Image (b) Warped Offset Image (c) Offset Image (d) Computed Disparity Map

Figure 14: (a) and (c) Two images of the entrance to Peterhouse chapel in Cambridge, UK. The Façade program was used to model the façade and ground as flat surfaces and to recover the relative camera positions. (b) The warped offset image, produced by projecting the offset image onto the approximate model and viewing it from the position of the key camera. This projection eliminates most of the disparity and foreshortening with respect to the key image, greatly simplifying stereo correspondence. (d) An unedited disparity map produced by our model-based stereo algorithm.
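The warp for a single pixel can be sketched under simplifying assumptions: ideal pinhole cameras with identity rotation (looking down +z), a shared focal length `f`, and an approximate model consisting of one plane `normal . X = d0`. The Fig. 14 model used two planes; a single plane keeps the sketch short. All function names are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project(center, f, X):
    """Pinhole camera at `center` looking down +z (identity rotation, for brevity)."""
    x, y, z = (X[i] - center[i] for i in range(3))
    return (f * x / z, f * y / z)


def back_project_to_plane(center, f, p, normal, d0):
    """Intersect the ray through pixel p with the model plane normal . X = d0."""
    direction = (p[0] / f, p[1] / f, 1.0)
    t = (d0 - dot(normal, center)) / dot(normal, direction)
    return tuple(center[i] + t * direction[i] for i in range(3))


def warp_offset_pixel(p_o, offset_center, key_center, f, plane):
    """Where offset-image pixel p_o lands in the warped offset image.

    Project the pixel onto the approximate model (here one plane), then reproject the
    model point into the key camera.
    """
    normal, d0 = plane
    Q = back_project_to_plane(offset_center, f, p_o, normal, d0)
    return project(key_center, f, Q)


# Usage: a point lying on the plane z = 10 lands exactly where the key camera sees it.
off, key, plane = (2.0, 0.0, 0.0), (0.0, 0.0, 0.0), ((0.0, 0.0, 1.0), 10.0)
P = (1.0, 0.5, 10.0)
warped = warp_offset_pixel(project(off, 1.0, P), off, key, 1.0, plane)
```

Points off the plane land at a different position than in the key image, and that residual is the (small) disparity the stereo search must recover.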

The reduction of differences in foreshortening is just one of several ways that the warped offset image simplifies stereo correspondence. Some other desirable properties of the warped offset image are:

Any point in the scene that lies on the approximate model will have zero disparity between the key image and the warped offset image.

Disparities between the key and warped offset images are easily converted to a depth map for the key image.

Depth estimates are far less sensitive to noise in image measurements, since images taken from relatively far apart can be compared.

Places where the model occludes itself relative to the key image can be detected and indicated in the warped offset image.

A linear epipolar geometry (Sec. 4.1) exists between the key and warped offset images, despite the warping. In fact, the epipolar lines of the warped offset image coincide with the epipolar lines of the key image.
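Converting a match in the warped offset image into depth can be sketched as follows, again assuming identity-rotation pinhole cameras, a shared focal length, and a single model plane. The sketch undoes the warp (key ray through the match hits the model, then projects into the offset camera) and triangulates the two original rays with the midpoint method; that triangulation choice is an assumption, not necessarily the paper's. All names are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project(center, f, X):
    """Pinhole camera at `center` looking down +z (identity rotation, a simplification)."""
    x, y, z = (X[i] - center[i] for i in range(3))
    return (f * x / z, f * y / z)


def ray(f, p):
    """Viewing direction through pixel p for the cameras above."""
    return (p[0] / f, p[1] / f, 1.0)


def hit_plane(center, direction, normal, d0):
    """Point where the ray center + t * direction meets the plane normal . X = d0."""
    t = (d0 - dot(normal, center)) / dot(normal, direction)
    return tuple(center[i] + t * direction[i] for i in range(3))


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = [a - b for a, b in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    den = a * c - b * b  # zero only for parallel rays
    s, t = (b * e - c * d) / den, (a * e - b * d) / den
    return tuple((c1[i] + s * d1[i] + c2[i] + t * d2[i]) / 2 for i in range(3))


def depth_from_warped_match(p_k, q_k, key_c, off_c, f, plane):
    """Depth of key pixel p_k, given its match q_k in the warped offset image."""
    normal, d0 = plane
    Q = hit_plane(key_c, ray(f, q_k), normal, d0)   # model point seen at q_k
    p_o = project(off_c, f, Q)                      # undo the warp: original offset pixel
    P = triangulate_midpoint(key_c, ray(f, p_k), off_c, ray(f, p_o))
    return P[2] - key_c[2]                          # depth along the key optical axis


# Usage: a point 2 units behind the model plane z = 10.
key, off, plane = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0), ((0.0, 0.0, 1.0), 10.0)
P = (1.0, 0.5, 12.0)
q_k = project(key, 1.0, hit_plane(off, ray(1.0, project(off, 1.0, P)), *plane))
depth = depth_from_warped_match(project(key, 1.0, P), q_k, key, off, 1.0, plane)  # about 12.0
```

When the match has zero disparity (q_k equals p_k), the recovered depth is simply the depth of the model surface, which is the first property listed above.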

4.1 Model-Based Epipolar Geometry

In traditional stereo, the epipolar constraint (see [6]) is often used to restrict the search for corresponding points in the offset image to a search along an epipolar line. This constraint simplifies stereo not only by reducing the search for each correspondence to one dimension, but also by reducing the chance of selecting false matches. In this section we show that taking advantage of the epipolar constraint is no more difficult in the model-based stereo case, despite the fact that the offset image is non-uniformly warped.
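For calibrated cameras, the traditional epipolar constraint is commonly written with the essential matrix E = [t]×R, where X_offset = R X_key + t relates camera coordinates and image points are in normalized coordinates (unit focal length). The sketch below assumes that convention; the function names are hypothetical. A candidate match x_o is consistent with key point x_k only if x_o^T E x_k = 0, i.e. x_o lies on the line E x_k.

```python
def skew(t):
    """Cross-product matrix: skew(t) applied to v equals t x v."""
    return [[0.0, -t[2], t[1]],
            [t[2], 0.0, -t[0]],
            [-t[1], t[0], 0.0]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def essential_matrix(R, t):
    """E = [t]_x R, for the convention X_offset = R @ X_key + t (camera coordinates)."""
    return matmul(skew(t), R)


def offset_epipolar_line(E, x_k):
    """Coefficients (a, b, c) of the offset-image line a*x + b*y + c = 0 for key point x_k."""
    return [sum(E[i][j] * x_k[j] for j in range(3)) for i in range(3)]


def epipolar_residual(E, x_k, x_o):
    """x_o^T E x_k; zero (up to rounding) for a geometrically consistent match."""
    line = offset_epipolar_line(E, x_k)
    return sum(x_o[i] * line[i] for i in range(3))


# Usage: offset camera centered at (2, 1, 0.5) in key coordinates, no rotation, so t = -center.
I = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
E = essential_matrix(I, (-2.0, -1.0, -0.5))
x_k = (1 / 12, -0.5 / 12, 1.0)        # P = (1, -0.5, 12) seen by the key camera
x_o = (-1 / 11.5, -1.5 / 11.5, 1.0)   # the same P seen by the offset camera
```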

Fig. 15 shows the epipolar geometry for model-based stereo. If we consider a point P in the scene, there is a unique epipolar plane that passes through P and the centers of the key and offset cameras. This epipolar plane intersects the key and offset image planes in the epipolar lines e_k and e_o. If we consider the projection p_k of P onto the key image plane, the epipolar constraint states that the corresponding point in the offset image must lie somewhere along the offset image's epipolar line.

In model-based stereo, neighborhoods in the key image are compared to the warped offset image rather than to the offset image. So, to make use of the epipolar constraint, it is necessary to determine where the pixels on the offset image's epipolar line project to in the warped offset image. The warped offset image is formed by projecting the offset image onto the model, and then reprojecting the model onto the image plane of the key camera. Thus, the projection p_o of P in the offset image projects onto the model at Q, and then reprojects to q_k in the warped offset image. Since each of these projections occurs within the epipolar plane, any possible correspondence for p_k in the key image must lie on the key image's epipolar line in the warped offset image. In the case where the actual structure and the model coincide at P, p_o is projected to P and then reprojected to p_k, yielding a correspondence with zero disparity.

Figure 15: Epipolar geometry for model-based stereo.
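The collinearity argument can be checked numerically. The sketch assumes unit-focal pinhole cameras with identity rotation and a single tilted model plane; it computes the key epipolar line through p_k (the line joining the epipole and p_k in homogeneous coordinates), follows P's offset pixel onto the model at Q and back into the key view at q_k, and reports how far q_k lies from that line. The names and the scene are illustrative assumptions.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def proj_h(center, X):
    """Homogeneous projection for a unit-focal camera at `center` with identity rotation."""
    return tuple(X[i] - center[i] for i in range(3))


def warped_epipolar_check(P, key_c, off_c, normal, d0):
    """Return (p_k, q_k, residual) for scene point P.

    p_k: P in the key image; q_k: where P's offset pixel lands in the warped offset
    image; residual: signed distance of q_k from the key epipolar line through p_k.
    """
    p_k = proj_h(key_c, P)
    e_k = proj_h(key_c, off_c)          # epipole: offset camera center seen from key
    line = cross(e_k, p_k)              # key-image epipolar line through p_k
    ray = proj_h(off_c, P)              # offset pixel of P, as a viewing direction
    t = (d0 - dot(normal, off_c)) / dot(normal, ray)
    Q = tuple(off_c[i] + t * ray[i] for i in range(3))   # projected onto the model
    q = proj_h(key_c, Q)                                   # reprojected into the key view
    q_k = (q[0] / q[2], q[1] / q[2])
    residual = (line[0] * q_k[0] + line[1] * q_k[1] + line[2]) / math.hypot(line[0], line[1])
    return (p_k[0] / p_k[2], p_k[1] / p_k[2]), q_k, residual


# Usage: model plane 0.2*x + z = 10; P lies behind it, so q_k differs from p_k
# but stays on the same epipolar line.
p_k, q_k, r = warped_epipolar_check((1.0, -0.5, 12.0), (0.0, 0.0, 0.0),
                                    (2.0, 1.0, 0.5), (0.2, 0.0, 1.0), 10.0)
```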

The fact that the epipolar geometry remains linear after the warping step also facilitates the use of the ordering constraint [2, 6] through a dynamic programming technique.

4.2 Stereo Results and Rerendering

While the warping step makes it dramatically easier to determine stereo correspondences, a stereo algorithm is still necessary to actually determine them. The algorithm we developed to produce the images in this paper is described in [3].
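The algorithm of [3] is not reproduced here. As a hedged illustration of the ordering constraint combined with dynamic programming, the sketch below aligns one key scanline with the corresponding epipolar line of the warped offset image so that matched indices increase monotonically in both. The absolute-difference matching cost and the constant `occlusion_cost` are assumptions chosen for simplicity, and `dp_scanline` is a hypothetical name.

```python
def dp_scanline(key, warped, occlusion_cost=1.0):
    """Align two scanlines under the ordering constraint via dynamic programming.

    Returns matched (key_index, warped_index) pairs, increasing in both indices.
    Unmatched pixels are treated as occluded, each costing `occlusion_cost`.
    """
    n, m = len(key), len(warped)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    move = [[None] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(n + 1):
        for j in range(m + 1):
            if i == 0 and j == 0:
                continue
            best, step = inf, None
            if i > 0 and j > 0:  # match key[i-1] with warped[j-1]
                c = cost[i - 1][j - 1] + abs(key[i - 1] - warped[j - 1])
                if c < best:
                    best, step = c, "match"
            if i > 0:  # key pixel occluded in the warped image
                c = cost[i - 1][j] + occlusion_cost
                if c < best:
                    best, step = c, "skip_key"
            if j > 0:  # warped pixel occluded in the key image
                c = cost[i][j - 1] + occlusion_cost
                if c < best:
                    best, step = c, "skip_warped"
            cost[i][j], move[i][j] = best, step
    # Backtrack from the end to recover the optimal monotonic alignment.
    pairs, i, j = [], n, m
    while i > 0 or j > 0:
        step = move[i][j]
        if step == "match":
            pairs.append((i - 1, j - 1))
            i, j = i - 1, j - 1
        elif step == "skip_key":
            i -= 1
        else:
            j -= 1
    pairs.reverse()
    return pairs


# Usage: the first key pixel has no counterpart and is treated as occluded.
pairs = dp_scanline([5, 1, 2, 3], [1, 2, 3])
```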

Once a depth map has been computed for a particular image, we can rerender the scene from novel viewpoints using the methods described in [23, 16, 13]. Furthermore, when several images and their corresponding depth maps are available, we can use the view-dependent texture mapping method of Section 3 to composite the multiple renderings. The novel views of the chapel façade in Fig. 16 were produced by compositing four images in this way.

5 Conclusion and Future Work

In conclusion, we have presented a new, photograph-based approach to modeling and rendering architectural scenes. Our modeling approach, which combines both geometry-based and image-based modeling techniques, is built from two components that we have developed. The first component is an easy-to-use photogrammetric modeling system that facilitates the recovery of a basic geometric model of the photographed scene. The second component is a model-based stereo algorithm, which recovers precisely how the real scene differs from the basic model. For rendering, we have presented view-dependent texture mapping, which produces images by warping and compositing multiple views of the scene. Through judicious use of images, models, and human assistance, our approach is more convenient, more accurate, and more photorealistic than current geometry-based or image-based approaches for modeling and rendering real-world architectural scenes.

Figure 16: Novel views of the scene generated from four original photographs. These are frames from an animated movie in which the façade rotates continuously. The depth is computed from model-based stereo, and the frames are made by compositing image-based renderings with view-dependent texture mapping.

There are several improvements and extensions that can be made to our approach. First, surfaces of revolution represent an important component of architecture (e.g. domes, columns, and minarets) that are not recovered by our photogrammetric modeling approach. (As noted, the dome in Fig. 10 was manually sized by the user.) Fortunately, there has been much work (e.g. [24]) presenting methods for recovering such structures from image contours. Curved model geometry is also entirely compatible with our approach to recovering additional detail with model-based stereo.

Second, our techniques should be extended to recognize and model the photometric properties of the materials in the scene. The system should be able to make better use of photographs taken under varying lighting conditions, and it should be able to render images of the scene as it would appear at any time of day, in any weather, and with any configuration of artificial light. Already, the recovered model can be used to predict shadowing in the scene with respect to an arbitrary light source. However, a full treatment of the problem will require estimating the photometric properties (i.e. the bidirectional reflectance distribution functions) of the surfaces in the scene.

Third, it is clear that further investigation should be made into the problem of selecting which original images to use when rendering a novel view of the scene. This problem is especially difficult when the available images are taken at arbitrary locations. Our current solution to this problem, the weighting function presented in Section 3, still allows seams to appear in renderings and does not consider issues arising from image resampling. Another form of view selection is required to choose which pairs of images should be matched to recover depth in the model-based stereo algorithm.

Lastly, integrating the models created with the techniques presented in this paper into forthcoming real-time image-based rendering systems is clearly an attractive application.

Acknowledgments


This research was supported by a National Science Foundation Graduate Research Fellowship and by grants from Interval Research Corporation, the California MICRO program, and JSEP contract F49620-93-C-0014. The authors also wish to thank Tim Hawkins, Carlo Séquin, David Forsyth, and Jianbo Shi for their valuable help in revising this paper.

References


Ali Azarbayejani and Alex Pentland. Recursive estimation of motion, structure, and focal length. IEEE Trans. Pattern Anal. Machine Intell., 17(6):562-575, June 1995.

H. H. Baker and T. O. Binford. Depth from edge and intensity based stereo. In Proceedings of the Seventh IJCAI, Vancouver, BC, pages 631-636, 1981.

Paul E. Debevec, Camillo J. Taylor, and Jitendra Malik. Modeling and rendering architecture from photographs: A hybrid geometry- and image-based approach. Technical Report UCB//CSD-96-893, U.C. Berkeley, CS Division, January 1996.

D. J. Fleet, A. D. Jepson, and M. R. M. Jenkin. Phase-based disparity measurement. CVGIP: Image Understanding, 53(2):198-210, 1991.

Olivier Faugeras and Giorgio Toscani. The calibration problem for stereo. In Proceedings IEEE CVPR 86, pages 15-20, 1986.

Olivier Faugeras. Three-Dimensional Computer Vision. MIT Press, 1993.

Olivier Faugeras, Stéphane Laveau, Luc Robert, Gabriella Csurka, and Cyril Zeller. 3-D reconstruction of urban scenes from sequences of images. Technical Report 2572, INRIA, June 1995.

W. E. L. Grimson. From Images to Surface. MIT Press, 1981.

Leonard McMillan and Gary Bishop. Plenoptic modeling: An image-based rendering system. In SIGGRAPH '95, 1995.

Eric N. Mortensen and William A. Barrett. Intelligent scissors for image composition. In SIGGRAPH '95, 1995.

S. B. Pollard, J. E. W. Mayhew, and J. P. Frisby. A stereo correspondence algorithm using a disparity gradient limit. Perception, 14:449-470, 1985.

Richard Szeliski. Image mosaicing for tele-reality applications. In IEEE Computer Graphics and Applications, 1996.

Camillo J. Taylor and David J. Kriegman. Structure and motion from line segments in multiple images. IEEE Trans. Pattern Anal. Machine Intell., 17(11), November 1995.

Seth J. Teller, Celeste Fowler, Thomas Funkhouser, and Pat Hanrahan. Partitioning and ordering large radiosity computations. In SIGGRAPH '94, pages 443-450, 1994.

Carlo Tomasi and Takeo Kanade